MIT researchers enable local compute on edge devices using MCUs

Two research groups at MIT have found a way to reduce the memory that neural networks need when running on microcontroller units (MCUs) in edge devices. This could allow AI tasks such as person detection to run on a greater number of inexpensive devices rather than being handed off to a remote server or public cloud, opening up a new range of edge use cases.

The processing power and memory available on MCUs are tiny: typically 256KB of RAM and 1MB of storage, compared with a smartphone, which may have several gigabytes of RAM and hundreds of gigabytes of storage. For microcontroller units, memory is therefore a precious resource. Two MIT research groups, from the MIT-IBM Watson AI Lab and the Computer Science department, profiled how neural networks use MCU memory and found that usage was unevenly distributed across the network, which created a bottleneck.
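To make the idea of memory profiling concrete, here is a minimal Python sketch. It is not the researchers' tooling: the layer shapes are hypothetical, activations are assumed to be int8 (one byte per value), and each layer is assumed to need its input and output buffers in RAM at the same time. Only the 256KB budget comes from the figures above.

```python
# Hypothetical output shapes (name, height, width, channels) for a small CNN.
# These are illustrative values, not taken from the MIT work.
LAYERS = [
    ("input", 224, 224, 3),
    ("conv1", 112, 112, 16),
    ("conv2", 56, 56, 24),
    ("conv3", 28, 28, 40),
    ("conv4", 14, 14, 96),
    ("conv5", 7, 7, 160),
]

SRAM_BUDGET = 256 * 1024  # 256KB of RAM, the MCU figure cited in the article
BYTES_PER_VALUE = 1       # assumption: int8-quantized activations


def activation_bytes(height, width, channels):
    """Size of one activation tensor in bytes."""
    return height * width * channels * BYTES_PER_VALUE


def profile(layers):
    """Return [(layer name, bytes)] where bytes = input buffer + output buffer."""
    usage = []
    for prev, cur in zip(layers, layers[1:]):
        in_bytes = activation_bytes(*prev[1:])
        out_bytes = activation_bytes(*cur[1:])
        usage.append((cur[0], in_bytes + out_bytes))
    return usage


usage = profile(LAYERS)
peak_layer, peak_bytes = max(usage, key=lambda item: item[1])
over_budget = [name for name, size in usage if size > SRAM_BUDGET]

for name, size in usage:
    flag = "OVER BUDGET" if size > SRAM_BUDGET else "ok"
    print(f"{name:>6}: {size:>7} bytes  {flag}")
print(f"peak: {peak_layer} ({peak_bytes} bytes)")
```

In this toy network only the first two layers exceed the budget, while the later layers use a small fraction of it. That is the kind of imbalance a per-layer profile makes visible: the peak, not the average, determines whether the model fits.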

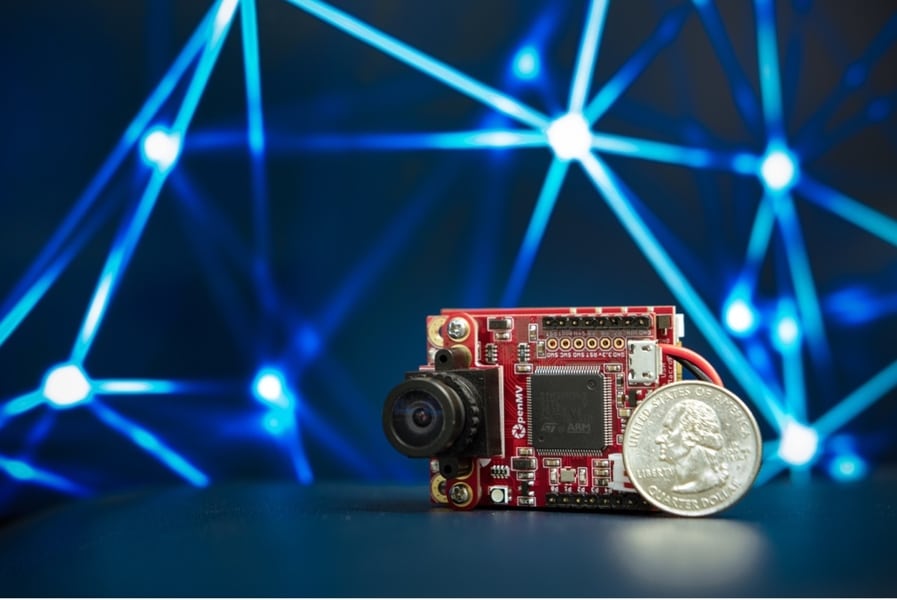

An example of an MCU implementation.

Source: MIT

With a new neural network architecture design, the teams were able to reduce peak memory usage by a factor of four to eight. They then applied this to their human detection system, MCUNetV2, and found that it outperformed other approaches running on MCUs. This, they argue, will open the door to new video recognition applications that were not possible before.

This TinyML approach is quicker and cheaper than deep learning performed on remote servers using data gathered from sensors, since the IoT devices involved cost only $1 or $2. Keeping the data on the device rather than handing it off to a public cloud also improves security.

“We really push forward for these larger-scale, real-world applications,” says Song Han, assistant professor at MIT. “Without GPUs or any specialized hardware, our technique is so tiny it can run on these small cheap IoT devices and perform real-world applications like these visual wake words, face mask detection, and person detection. This opens the door for a brand-new way of doing tiny AI and mobile vision.”

As the researchers say, it’s an important step down the path of bringing AI to the mass market.
